Three paradoxes of AI in education and how to handle them

AI is revolutionizing education. But are we using it in the way that best boosts learning? Let's break down three paradoxes in how AI is currently used in education.

"It was the best of times, it was the worst of times." Charles Dickens wasn’t talking about AI, but he might as well have been.

AI is already changing education. According to OpenAI, college students use ChatGPT more than any other user group. Some use it as a study assistant, others to generate flashcards or rewrite essays. Edtech leaders talk about boosts in “efficiency” and “personalization.” The possibilities seem endless. It’s hard not to see this as the biggest revolution in educational thinking in the last 100 years (maybe in thinking, full stop). But something doesn’t add up.

A recent MIT study found that students who relied on AI scored worse on critical thinking. The Flynn Effect is the trend of average IQ rising globally over the last century. It seems to have peaked and started reversing in several developed countries. AI feels like a gift to education, but it often works against how learning actually happens. Not because it can’t support learning, but because learning isn’t what it was designed for.

In a recent paper, Barbara Oakley describes the “memory paradox”: by offloading our knowledge onto tools such as AI chatbots, we prevent long-term memory from forming and, with it, reduce our mental flexibility. Carl Hendrick’s commentary on the paper makes a sharp point: memory is still the backbone of learning.

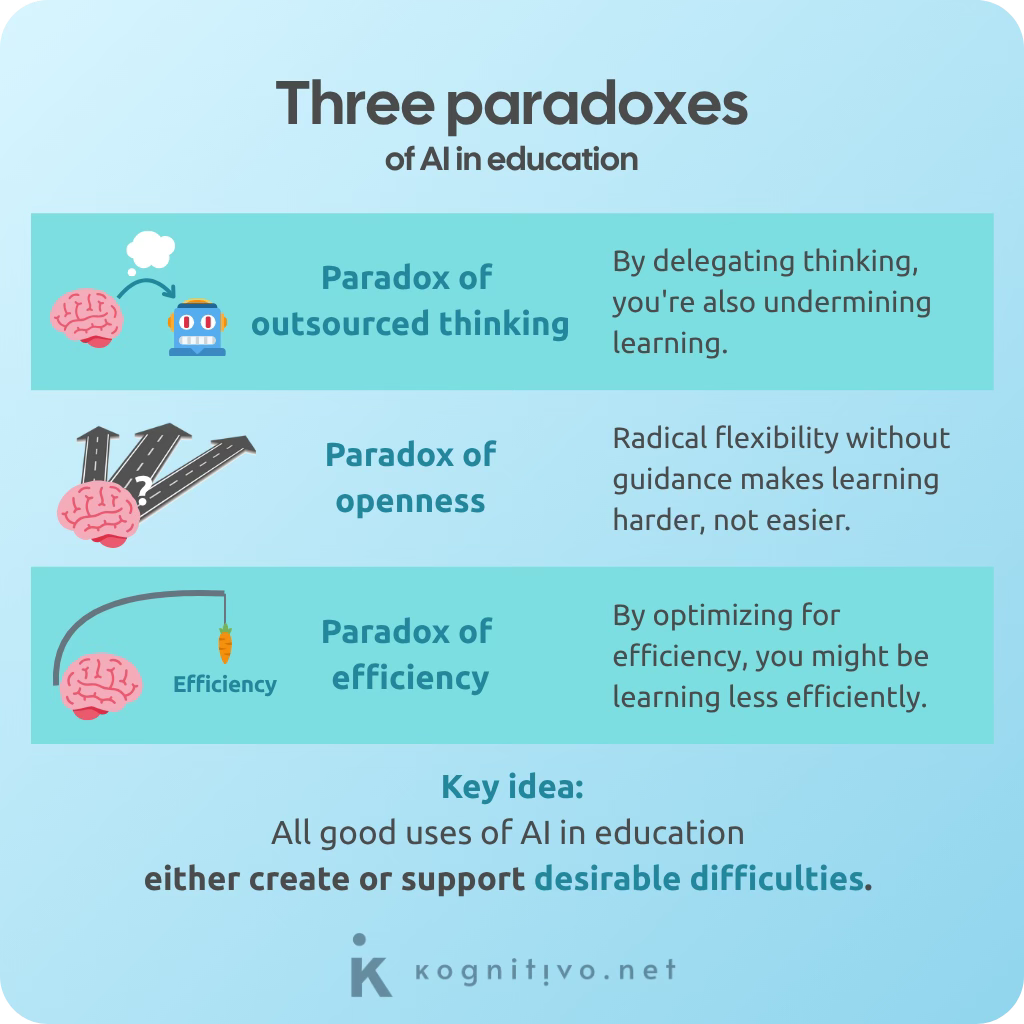

I find that the “paradox” framing captures something essential about how AI is used in education: well-meaning tools often produce contradictory outcomes. I’d like to unpack three more paradoxes I’ve observed and what we can do about them.

1. The paradox of outsourced thinking

The MIT study found that students who used AI to write essays couldn’t recall what “they” had written. This is the AI version of the illusion of fluency: when a chatbot hands you the answer, it feels like your brain did something. It didn’t. Only when you have to solve a similar problem later do you realize that you depend on the AI and didn’t really learn.

Even retrieval practice, one of the most effective learning techniques, loses its power if you let AI do the recalling for you. However, when students use AI to support their own retrieval rather than to retrieve for them, they learn more. After all, doing the retrieval yourself was the whole point!

This raises a few uncomfortable truths:

Getting to an answer isn’t learning.

Completing a task isn’t learning.

Real learning is about overcoming the right kinds of struggles: the ones that make us think, keep us cognitively engaged and activate deep processing. If done properly, that thinking gets encoded and reinforced as long-term memory. Cognitive scientists call these struggles desirable difficulties.

Paradox of outsourced thinking: by delegating thinking, you're also undermining learning.

2. The paradox of openness

The openness, flexibility and versatility of AI chatbots are among their greatest strengths: they can tackle virtually any issue or help with any kind of problem. However, that very openness becomes a problem when it comes to learning.

Proper learning needs scaffolding: presenting simpler knowledge first, offering a warm-up problem or layering concepts before introducing complexity. It’s by adjusting the scaffolding that we can get to the optimal challenge point. Structure is fundamental to great learning experiences. But chatbots don’t scaffold by default. They don’t pace unless asked to.

Certainly, this can be improved with good prompt engineering or by giving the AI context. Motivated learners do exactly that. However, a study from last year showed that learners with less AI experience struggled more to use AI chatbots than proficient learners did. The study also showed that this struggle was considerably reduced when the chatbot offered scaffolded or guided interactions.

Expecting every learner to proactively shape their own learning experience and master prompting to access quality learning isn’t just unrealistic. It misunderstands what kind of users most learners are. Learners should benefit from any learning tool without having to master the art of tweaking it. Otherwise, we create accessibility barriers without realizing it. Students with weaker metacognitive and technical skills get left out, and they are precisely the ones who need the most support.

Paradox of openness: radical flexibility without guidance makes learning harder, not easier.

3. The paradox of efficiency

Efficient learning is the objective of any learning tool. This means that every learning app, website or material should aim to provide (1) the highest possible quality in (2) the shortest amount of time. When we say that we want to optimize efficiency, we usually mean increasing speed without sacrificing quality.

But with AI in learning, optimizing for efficiency often leads us to streamline every step of the process so we reach the learning outcome faster. Streamlining and optimizing usually mean removing obstacles and blockers. And as we've seen, it's precisely difficulties (of a certain kind) that make learning happen.

If we define efficiency as speed plus quality, and AI already handles speed, then the real challenge is raising quality to match non-AI learning experiences. Ironically, that means adding friction and well-calibrated learning challenges, not removing them. Support effort and productive struggle instead of eliminating them. So even though efficient learning is the ultimate goal, focusing on efficiency might be what prevents us from achieving it.

Paradox of efficiency: by optimizing for efficiency, you might be learning less efficiently.

How to handle these paradoxes

When it comes to AI in education, it’s now commonplace to hear people say, “It depends on how you use it.” But when pressed on what that actually means, those same people struggle to give a clear, straightforward answer.

Here’s the simple answer:

All good uses of AI in education either create or support desirable difficulties. That is, AI is used well when it gives us, as learners, the kinds of cognitive struggles that keep us mentally engaged.

Use AI to support and shape effort, not skip it. Let it quiz you, space your practice, challenge you, interleave topics or give hints, not answers. Learning happens when you wrestle with ideas, not when you copy them. But not every struggle is useful: AI tools shouldn't offload the responsibility of designing the learning experience onto learners. That’s an undesirable difficulty. Scaffolded, structured learning should be the default.

For AI to have a positive impact on our learning experience, learning tools need to be designed to guide us by default into the cognitive struggles that help us learn, even when we don’t know what to ask (which is the most common starting point!).

As it happens, OpenAI is already working on a "Study with me" mode for ChatGPT and Anthropic is introducing Claude for Education as well. Other tools are experimenting with Socratic dialogue as an alternative to prompt-heavy interaction. And many learning apps are starting to embed AI-driven experiences into structured learning plans, where the plan provides the pacing and scaffolding. So, there’s real movement in the right direction.

The real lesson behind the hype

AI in education isn’t a miracle. It’s a mirror.

It reflects back all the tensions we never resolved: speed vs depth, freedom vs guidance, ease vs effort. The good news? It also gives us a genuine chance to rethink learning from the ground up.

Not by removing difficulty. But by designing it on purpose.

AI won’t do the learning for us, but it can finally help us struggle better.

Keep learning

Prompt suggestions. Always ask follow-up questions:

I want to use AI to study more effectively. Can you help me design a study session where the AI supports desirable difficulties instead of bypassing them?

Act as a learning coach. I’m worried that using AI is making me too passive. How can I tell if I’m outsourcing too much of my thinking and what should I change?

Explain in simple terms the paradox of efficiency related to the use of AI in education. Then help me come up with one concrete way to use AI that doesn’t fall into that trap.

I want to consider multiple perspectives. Find 3 experts with different points of view on the impact AI is having on education and compare their opinions.